Raspberry Pi vs NYC: RPI5 ANPR

Can a Raspberry Pi 5 Detect Number Plates in New York City?

Welcome back to our deep dive into the capabilities of the Raspberry Pi 5! In this blog post, we’ll explore whether this tiny powerhouse can reliably spot number plates on the bustling streets of New York City. If you missed Part 1, where we built and set up the Raspberry Pi with an AI HAT, be sure to check that out first. Today, we’ll take a closer look at running pre-trained models, creating a dataset, and training a segmentation model for number plate detection.

What We Will Cover

- Running Pre-Trained Models

- Creating a Dataset and Training a Model

- Converting the Model for Hailo

- Running Inference on NYC Driving Videos

- Future Steps and Considerations

1. Running Pre-Trained Models

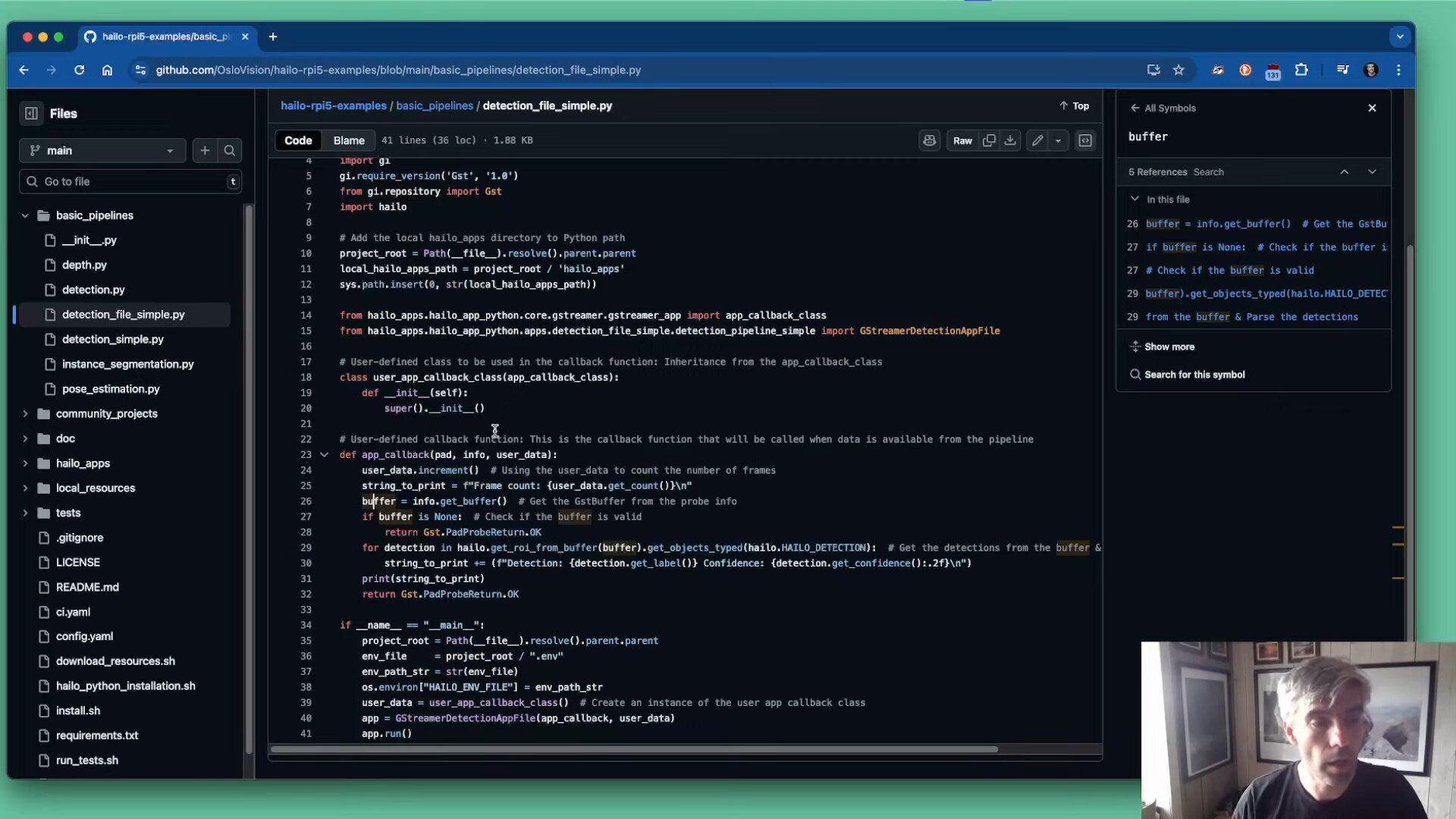

To kick things off, I forked the Hailo Raspberry Pi 5 examples repository. This repository is a fantastic resource for anyone getting started, with numerous examples that show how everything fits together.

In addition to the examples repository, I also forked the hailo-apps-infra repository and used it as the main codebase for the experiments in this demo. This provided a solid foundation for building and customizing the detection pipelines tailored to our use case.

I started by running a pre-trained model to get a feel for the performance and capabilities of the Raspberry Pi 5 with the Hailo AI accelerator. I chose a general object detection model that can identify various objects in video frames, and used a promotional video for a motorboat as the test input. The results were impressive: the Raspberry Pi handled the video stream and ran inference in near real time, at 25 fps.
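The 25 fps figure is a throughput measurement. If you want to reproduce a number like that on your own footage, a sliding-window frame counter is a simple way to do it. The sketch below is a hypothetical helper (`FpsMeter` is not part of the Hailo examples) and assumes you call `tick()` once after each processed frame.

```python
import time
from collections import deque


class FpsMeter:
    """Sliding-window frames-per-second estimate (hypothetical helper)."""

    def __init__(self, window: int = 30, clock=time.monotonic):
        # Timestamps of the most recent `window` frames; older ones fall off.
        self._times = deque(maxlen=window)
        self._clock = clock

    def tick(self) -> None:
        """Record that one frame has finished processing."""
        self._times.append(self._clock())

    def fps(self) -> float:
        """Frames per second over the current window (0.0 until two frames are seen)."""
        if len(self._times) < 2:
            return 0.0
        elapsed = self._times[-1] - self._times[0]
        return (len(self._times) - 1) / elapsed if elapsed > 0 else 0.0
```

In a frame loop you would call `meter.tick()` after each inference and print `meter.fps()` every so often. Averaging over a window smooths out the frame-to-frame jitter that a single-frame timing would show.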

Key Steps:

- Pipeline Creation: I created a new pipeline called Detection File Simple that runs a pre-trained model.

- Input Sources: The pipeline can accept various input sources, such as a video file or a camera (USB or Raspberry Pi camera).

- Inference Output: The pipeline processes the video frame by frame, printing results to the terminal and overlaying detections on an output video file.

detection file simple
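Conceptually, the steps above boil down to "pick a source, run the detector on each frame, report and draw the confident hits". The Hailo examples build a GStreamer pipeline rather than a plain Python loop, so treat the sketch below only as a mental model: `Detection`, `resolve_source` and `process_stream` are hypothetical names, and the `rpi`/`usb`/file convention for the input argument is an assumption for illustration.

```python
from dataclasses import dataclass
from pathlib import Path
from typing import Callable, Iterable, Iterator, List, Tuple


@dataclass
class Detection:
    label: str
    confidence: float
    box: Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max) in pixels


def resolve_source(arg: str) -> str:
    """Classify an input argument (hypothetical convention): 'rpi', 'usb' or 'file'."""
    if arg in ("rpi", "usb"):
        return arg
    if arg.startswith("/dev/video"):
        return "usb"  # a V4L2 device node, typically a USB webcam
    if Path(arg).suffix.lower() in {".mp4", ".mov", ".mkv", ".avi"}:
        return "file"
    raise ValueError(f"unrecognised input source: {arg!r}")


def process_stream(
    frames: Iterable[object],
    detect: Callable[[object], List[Detection]],
    threshold: float = 0.5,
) -> Iterator[List[Detection]]:
    """Run `detect` on each frame, print confident hits, and yield them for overlaying."""
    for index, frame in enumerate(frames):
        kept = [d for d in detect(frame) if d.confidence >= threshold]
        for d in kept:
            print(f"frame {index}: {d.label} {d.confidence:.2f} at {d.box}")
        # A real pipeline would draw `kept` onto the frame and write it to the output video.
        yield kept
```

Keeping the detector as a plain callable means the same loop works whether `detect` wraps the Hailo accelerator or a stub used for testing.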

Next, I shifted my focus to a more specific task: number plate detection. This required creating a custom dataset and training a model on it, which brings us to the next section. It also involved moving to instance segmentation rather than just bounding boxes.
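One plausible reason segmentation helps here: a plate seen at an angle is a skewed quadrilateral, and an upright bounding box around it also swallows background. The sketch below uses made-up pixel coordinates to compare a plate polygon's area (via the shoelace formula) with the area of its axis-aligned bounding box.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def polygon_area(points: List[Point]) -> float:
    """Area of a simple polygon via the shoelace formula."""
    total = 0.0
    # Pair each vertex with the next one, wrapping the last back to the first.
    for (x1, y1), (x2, y2) in zip(points, points[1:] + points[:1]):
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_box(points: List[Point]) -> Tuple[float, float, float, float]:
    """Axis-aligned box (x_min, y_min, x_max, y_max) enclosing the polygon."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return min(xs), min(ys), max(xs), max(ys)


# A plate seen at an angle: a parallelogram rather than an upright rectangle.
plate = [(100.0, 120.0), (200.0, 100.0), (200.0, 140.0), (100.0, 160.0)]
x0, y0, x1, y1 = bounding_box(plate)
box_area = (x1 - x0) * (y1 - y0)
print(f"mask area {polygon_area(plate):.0f} px^2, box area {box_area:.0f} px^2")
# → mask area 4000 px^2, box area 6000 px^2
```

In this example the box covers 50% more pixels than the plate itself, which is background a box-based model has to tolerate and a mask does not.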

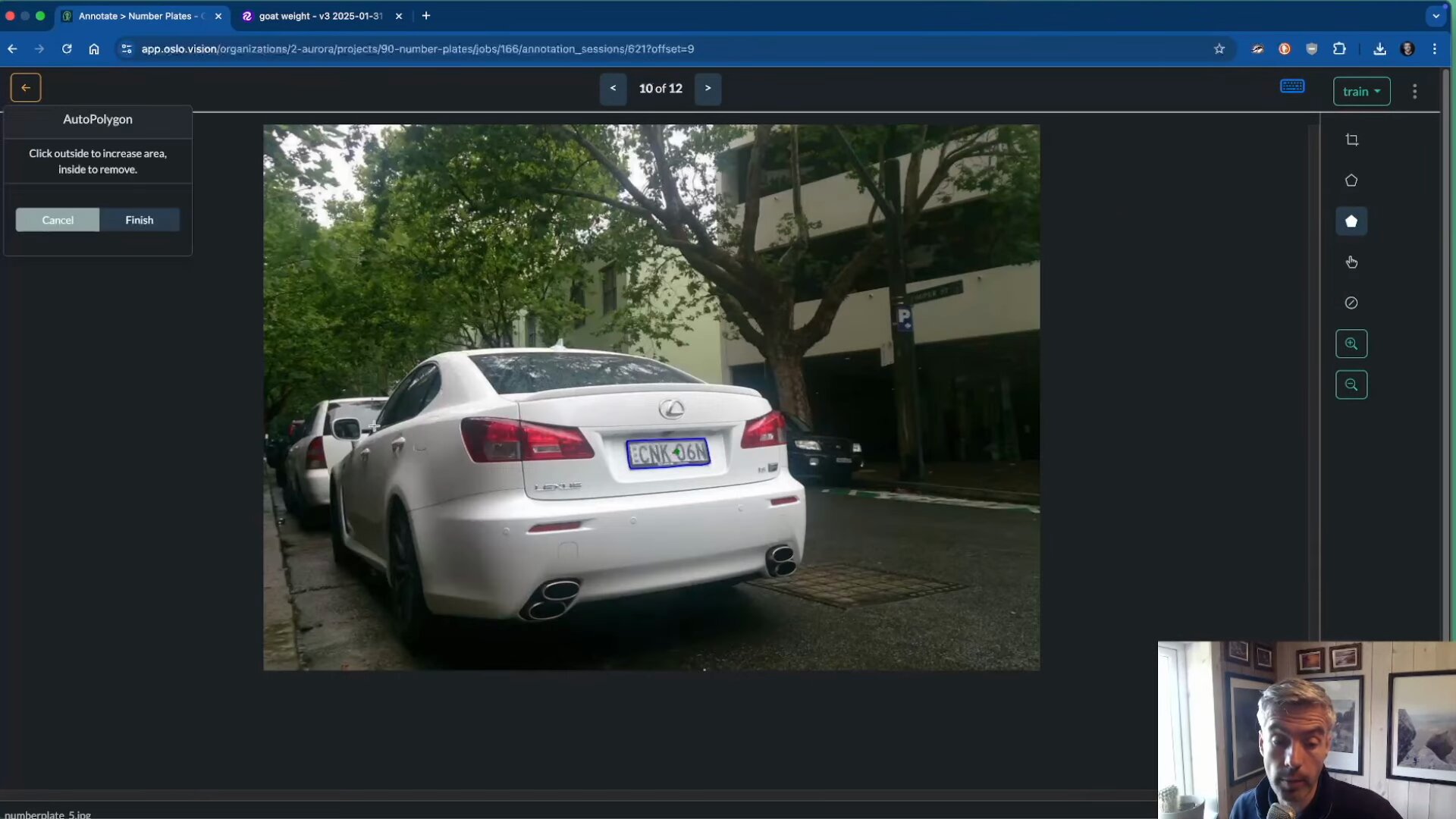

2. Creating a Dataset and Training a Model

For this, we built our own number plate dataset, using the oslo.vision platform to upload images of number plates and annotate them.

Steps to Create the Dataset:

- Image Upload: I uploaded images of cars with visible number plates.

- Annotation Process: Using the autopoly tool, I annotated the images with segmentation masks rather than traditional bounding boxes.

- Model Training: We opted for the YOLOv8 small segmentation model, as it is supported on the Hailo hardware. The training process was straightforward, and the model was trained to recognize number plates specifically. We trained it for about 100 epochs, which took roughly 30 minutes on the free tier of Google Colab.

annotate dataset in oslo
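YOLOv8 segmentation models train on one text file per image, where each line is a class index followed by the polygon's vertices as x/y pairs normalised to the 0 to 1 range. Assuming your annotations are exported in that Ultralytics format (how oslo.vision exports them is not covered here), the sketch below shows what a single label line looks like; `to_yolo_seg_line` is a hypothetical helper.

```python
from typing import List, Tuple


def to_yolo_seg_line(class_id: int, polygon: List[Tuple[float, float]],
                     width: int, height: int) -> str:
    """Format one polygon as a YOLO segmentation label line with normalised coordinates."""
    if len(polygon) < 3:
        raise ValueError("a polygon needs at least three points")
    coords = []
    for x, y in polygon:
        # Normalise by image size and clamp to [0, 1], the range YOLO expects.
        coords.append(min(max(x / width, 0.0), 1.0))
        coords.append(min(max(y / height, 0.0), 1.0))
    return " ".join([str(class_id)] + [f"{c:.6f}" for c in coords])


# One number plate (class 0) in a 1280x720 image.
print(to_yolo_seg_line(0, [(640, 360), (768, 360), (768, 396), (640, 396)], 1280, 720))
# → 0 0.500000 0.500000 0.600000 0.500000 0.600000 0.550000 0.500000 0.550000
```

With labels in this format, the Ultralytics package can fine-tune the small segmentation checkpoint with a call along the lines of `YOLO("yolov8s-seg.pt").train(data="plates.yaml", epochs=100)`, where `plates.yaml` is a hypothetical dataset config listing the image folders and class names.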

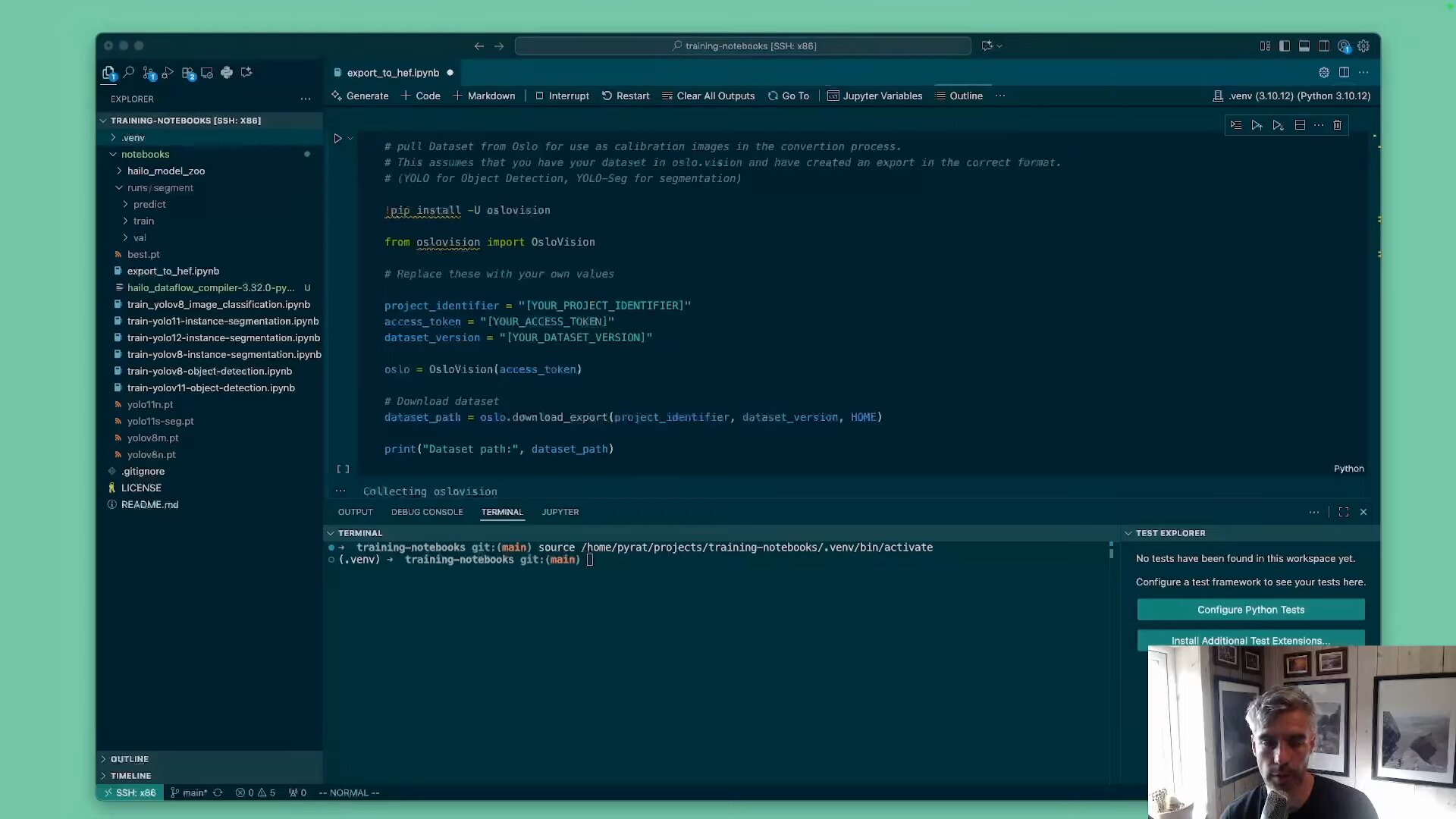

3. Converting the Model for Hailo

Once the model was trained, the next step was to convert it into a format compatible with the Hailo AI accelerator. This involved using the Hailo SDK to optimize the model for the accelerator attached to the Raspberry Pi 5.

Conversion Steps:

I recommend using the Export to HEF Notebook provided by Oslo Vision. This notebook guides you through converting your trained model into the HEF (Hailo Executable Format) file required by the Hailo hardware.

export to hef

The YouTube video above shows the entire process; this part covers the HEF export specifically.
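Part of what the Hailo toolchain does during conversion is quantize the model, which relies on a calibration set of representative images. The notebook's exact internals are not described here, so the sketch below only illustrates one sensible way to prepare such a set: a reproducible random sample of dataset images. `pick_calibration_images` is a hypothetical helper, and the default count of 64 is an arbitrary placeholder; check the Hailo Dataflow Compiler documentation for recommended sizes.

```python
import random
from pathlib import Path
from typing import List, Sequence


def pick_calibration_images(paths: Sequence[str], count: int = 64, seed: int = 0) -> List[str]:
    """Reproducibly sample up to `count` image paths for quantization calibration."""
    images = sorted(p for p in paths if Path(p).suffix.lower() in {".jpg", ".jpeg", ".png"})
    if count >= len(images):
        return images
    # A fixed seed means re-running the export uses exactly the same calibration set.
    rng = random.Random(seed)
    return sorted(rng.sample(images, count))
```

Sampling from the training images, rather than hand-picking a few, keeps the calibration data closer to the lighting and angles the model will actually see.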

4. Running Inference on NYC Driving Videos

Once our dataset was ready and the model trained, it was time to put it to the test. I ran the newly trained model on a two-minute video of driving through New York City.

Key Observations:

Detection Accuracy: Accuracy was very impressive, close to 100% for number plates, although there were some false positives and missed detections. Street signs in particular were often misidentified as number plates. There are several ways to tackle this: run a pipeline that detects cars first and only looks for number plates within the car bounding boxes, train a more robust model on a larger dataset, or add classes for street signs and other common false positives.

Real-World Application: The results demonstrated that the Raspberry Pi can be a viable platform for real-time detection, although further refinement is needed to improve accuracy.

nyc driving footage

For a proper example of the inference results, jump to the inference results section.
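The first mitigation mentioned under Detection Accuracy, detecting cars first and only trusting plates that sit on a car, can be approximated with a simple post-filter. The sketch below is a hypothetical illustration rather than the author's pipeline: it keeps a plate only if the centre of its box falls inside some car box.

```python
from typing import List, Tuple

Box = Tuple[float, float, float, float]  # (x_min, y_min, x_max, y_max)


def centre_inside(inner: Box, outer: Box) -> bool:
    """True if the centre of `inner` lies within `outer`."""
    cx = (inner[0] + inner[2]) / 2
    cy = (inner[1] + inner[3]) / 2
    return outer[0] <= cx <= outer[2] and outer[1] <= cy <= outer[3]


def plates_on_cars(plates: List[Box], cars: List[Box]) -> List[Box]:
    """Keep only plate detections whose centre falls inside some detected car."""
    return [p for p in plates if any(centre_inside(p, c) for c in cars)]


cars = [(100, 200, 500, 450)]
plates = [(260, 380, 340, 410),  # on the car's bumper: kept
          (700, 50, 780, 90)]    # a street sign up and to the right: dropped
print(plates_on_cars(plates, cars))  # → [(260, 380, 340, 410)]
```

Testing the plate's centre rather than requiring full containment tolerates car boxes that clip the plate slightly; the trade-off is that plates on cars the car detector misses are dropped as well.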

5. Future Steps and Considerations

While the initial results were promising, there are several areas for improvement:

- Enhanced Data Annotation: A more comprehensive dataset covering diverse angles and lighting conditions should improve the model’s accuracy.

- Higher Resolution Input: Testing with a 4K camera could yield better detection results, especially for small or distant number plates.

- Optimizing Code: Better memory management in the codebase should improve performance. Threading in Python can be tricky, and I ran into some out-of-memory (OOM) issues before simplifying the pipeline to run single-threaded.
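If threading is reintroduced later, a common cause of OOM in frame pipelines is an unbounded buffer: the capture thread produces frames faster than inference consumes them, so frames pile up in memory. A generic remedy, not what the current single-threaded pipeline does, is a bounded queue that makes the producer wait. The sketch below uses only the standard library; `run_pipeline` and `max_buffered` are hypothetical names.

```python
import queue
import threading
from typing import Callable, Iterable, List


def run_pipeline(frames: Iterable[object], infer: Callable[[object], object],
                 max_buffered: int = 4) -> List[object]:
    """Producer/consumer pipeline whose queue never holds more than `max_buffered` frames."""
    q: "queue.Queue[object]" = queue.Queue(maxsize=max_buffered)
    done = object()  # sentinel marking the end of the stream
    results: List[object] = []

    def producer() -> None:
        for frame in frames:
            q.put(frame)  # blocks while the queue is full, so memory stays bounded
        q.put(done)

    reader = threading.Thread(target=producer, daemon=True)
    reader.start()
    while True:
        item = q.get()
        if item is done:
            break
        results.append(infer(item))
    reader.join()
    return results
```

The blocking `put` is the backpressure: a slow inference step throttles capture instead of letting the backlog grow. For a live camera you might prefer dropping old frames instead, which trades completeness for lower latency.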

Conclusion

In conclusion, the Raspberry Pi 5, coupled with the Hailo AI accelerator, has shown that it can perform real-time number plate detection with reasonable accuracy. Although there are challenges, such as model refinement and potential hardware limitations, the journey has just begun.

Stay tuned for the next installment, where we will make the Pi a full end-to-end Car Loan Detector!